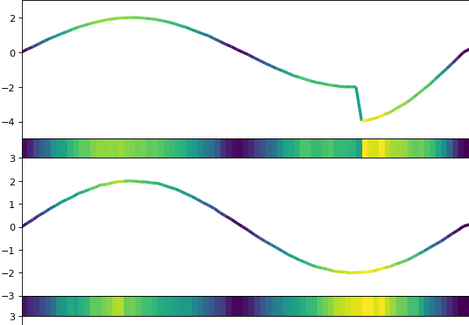

Explainable AI for time series data

While Explainable AI has been extensively researched for computer vision, time series have not yet received the same research attention. One reason is that images carry semantics that lend themselves to interpretation: an explanation can, for example, highlight a region within an image, such as a cat's fur. The same is not the case for time series.

Publications:

Journal paper:

Explainable AI for Time Series Classification: A review, taxonomy and research directions

Andreas Theissler, Francesco Spinnato, Udo Schlegel, Riccardo Guidotti (2022),

IEEE, IEEE Access

Open access: Link to paper

Explainable AI for time series classification and anomaly detection: Current state and open issues.

Keynote (invited talk) at Explainable AI for time series (XAI-TS) Workshop at ECML/PKDD 2023

Slides

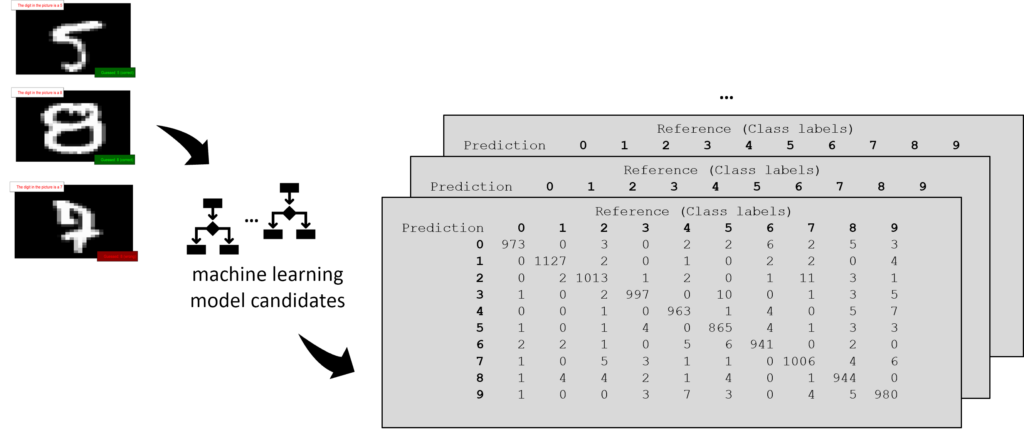

Evaluating and comparing multi-class classifiers

During the development of Machine Learning systems, a large number of model candidates is generated (different model types, hyperparameters, or feature subsets). Expert knowledge is key to evaluating, understanding, and comparing these model candidates, and hence to controlling the training process.

Publications:

Interpretable Machine Learning for Predictive Maintenance

In the field of predictive maintenance (PdM), machine learning (ML) has gained importance over the past years. Accompanying this development, an increasing number of papers use non-interpretable ML models to address PdM problems. While ML has achieved unprecedented performance in recent years, a lack of model explainability or interpretability may result in a lack of trust.

Publications:

Interpretable Machine Learning: A brief survey from the predictive maintenance perspective.

Simon Vollert, Martin Atzmueller, Andreas Theissler (2021).

IEEE International Conference on Emerging Technologies and Factory Automation (ETFA 2021).

Predictive Maintenance enabled by Machine Learning: Use cases and challenges in the automotive industry.

Andreas Theissler, Judith Perez-Velazquez, Marcel Kettelgerdes, Gordon Elger (2021).

Reliability Engineering & System Safety, ISSN 0951-8320, vol. 215,

DOI: https://doi.org/10.1016/j.ress.2021.107864

(the paper discusses several topics, interpretability being one of them)

Explainable AI for multivariate EEG time series

EEG time series are characterized by (a) spectral, (b) spatial and (c) temporal dimensions. Since all three dimensions are crucial for seizure detection, we argue that an explanation of an algorithmic prediction must unify these three dimensions.

Publications:

XAI4EEG: Spectral and spatio-temporal explanation of Deep Learning-based Seizure Detection in EEG time series,

Dominik Raab, Andreas Theissler, Myra Spiliopoulou (2022),

Springer, Neural Computing & Applications

Open access: Link to paper

Explainable AI: how far we have come and what’s left for us to do

Keynote (invited talk) at XKDD Workshop at ECML/PKDD 2023

Slides

Explaining is not enough: Four open problems in Explainable AI for AI with user interaction.

Andreas Theissler (2024).

AAAI Spring Symposium on User-Aligned Assessment of Adaptive AI Systems. Stanford 2024.

Explaining image classification based on coherent prototypes and patches

Current prototype-based classification of image data often yields prototypes with overlapping semantics, where several prototypes are similar to the same image parts. Such prototypes do not add to interpretability; rather, they reduce it by violating the principle of sparsity.

In research work mainly conducted by a PhD student at an industry partner and at Tuebingen University, we propose a novel method to obtain semantically coherent prototypes.

Publications:

SPARROW: Semantically Coherent Prototypes for Image Classification

Stefan Kraft, Klaus Brölemann, Andreas Theissler, Gjergji Kasneci (2021).

The British Machine Vision Conference (BMVC) 2021

https://www.bmvc2021-virtualconference.com/assets/papers/0896.pdf