Andreas Theissler’s research

Research on Machine Learning and Human-centered AI:

For humans, our society and our environment

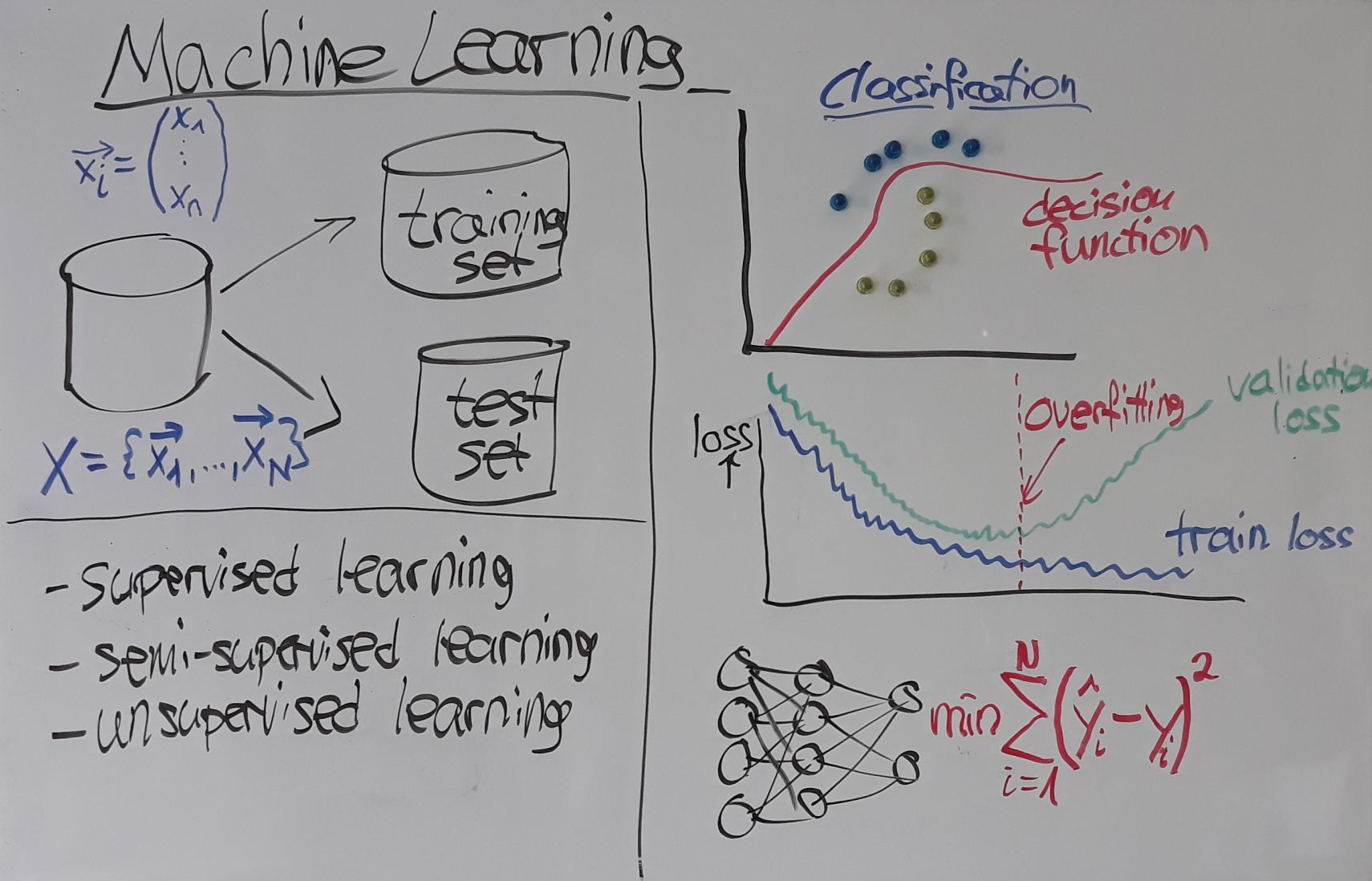

This website addresses research questions from the fields of Machine Learning in applications, Machine Learning fundamentals, and human-centered AI:

Examples of our research for machine learning in applications are fault detection and quality assurance in automotive systems or manufacturing using ML-based anomaly detection, explainable ML-based seizure detection in medical EEG data, tactile sensing in robotics, predictive maintenance, and Deep Learning-based analysis of solar signals for exoplanet detection. This line of research addresses the recurring question when bringing Machine Learning to applications:

How can we utilize, adapt, combine or enhance state-of-the-art machine learning for real-world applications in order to be beneficial for us humans or our environment?

Examples of research in the field of human-centered AI are explainable artificial intelligence (XAI) and learning from partly or fully unlabeled data – addressing the overarching question:

How can we enable, improve, evaluate, or understand machine learning by creating an interplay of humans and AI?

On this website you will find information, publications, and in some cases videos on research I have conducted in the aforementioned fields. Research is a never-ending process… so you might want to check the website regularly, read through the provided news ticker, or follow me on ResearchGate.

Andreas Theissler

Professor at Justus Liebig University Giessen, Germany (previously at Aalen University of Applied Sciences, Germany)

### News: ###

April 2025: Best paper award at IEEE EDUCON 2025 for the paper: Engaging students in scientific writing: The STRaWBERRY checklist framework with LLM-based paper draft assessment. A big thank you to the co-authors and the award committee.

2024: Paper on text-to-image models at IEEE COMPSAC

2024: Paper on the use of LLMs to learn programming in R accepted at IEEE Educon

2024: Paper on XAI for User-Aligned Assessment of Adaptive AI Systems at AAAI Spring Symposium

01/2024: Project funding: „AI alliance: data quality“ (funded by EU, federal state of Baden-Wuerttemberg and others)

Keynote (invited talk) at Explainable AI for time series (XAI-TS) Workshop at ECML/PKDD 2023: Explainable AI for time series classification and anomaly detection: Current state and open issues.

Keynote (invited talk) at XKDD Workshop at ECML/PKDD 2023: Explainable AI: how far we have come and what’s left for us to do

01/2023: Project funding: „AI for SMEs“ (funded by EU, federal state of Baden-Wuerttemberg and others)

Sept 2022: Journal paper in IEEE Access accepted: „Explainable AI for Time Series Classification: A review, taxonomy and research directions“

Sept 2022: Journal paper in Springer Neural Computing accepted: „XAI4EEG: Spectral and spatio-temporal explanation of Deep Learning-based Seizure Detection in EEG time series“

Sept 2022: Best paper award for PhD student at IEEE conference (IES Young Professionals & Students Paper Award): „Visual Detection of Tiny Objects for Autonomous Robotic Pick-and-Place Operations“ published at IEEE ETFA 2022.

Interpretable Machine Learning / Explainable AI

Learning from unlabeled data

Machine Learning in Applications

Anomaly Detection